
Introduction

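Two points in Google's latest Gemini update caught my attention. First, Gemini 1.5 Flash got a steep price cut. Second, the Gemini API and AI Studio can now understand PDF content through both text and vision.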


If your PDF contains charts, images, or other non-text visual content, the model processes the PDF using its native multimodal capabilities.

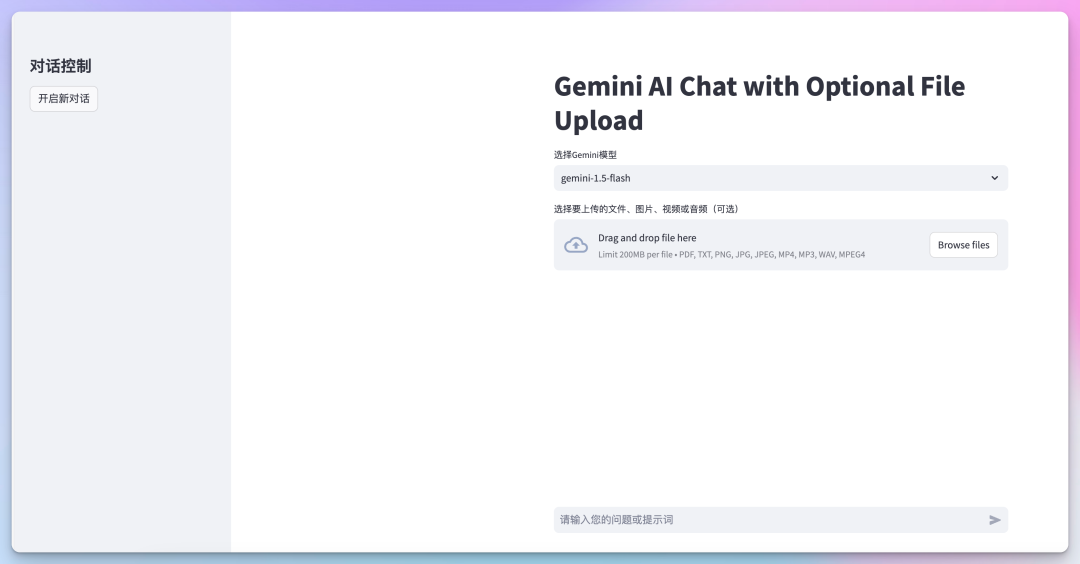
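For a small PDF, the file does not have to go through the File API. Below is a minimal sketch, assuming the `google-generativeai` SDK used in the full listing and a `GOOGLE_API_KEY` environment variable: the PDF bytes are passed inline as a dict with `mime_type` and `data`, followed by the text prompt. `build_pdf_parts` and `ask_about_pdf` are hypothetical helper names, not SDK functions. The full listing uploads files with `genai.upload_file` instead, which suits larger files better.

```python
import os


def build_pdf_parts(pdf_bytes: bytes, prompt: str) -> list:
    """Build the contents list for generate_content: the PDF as inline data, then the prompt."""
    # Inline data is given as a dict holding the MIME type and the raw bytes.
    pdf_part = {"mime_type": "application/pdf", "data": pdf_bytes}
    return [pdf_part, prompt]


def ask_about_pdf(pdf_path: str, prompt: str, model_name: str = "gemini-1.5-flash") -> str:
    """Send a local PDF plus a question to Gemini and return the text answer."""
    # Imported here so build_pdf_parts can be used without the SDK installed.
    import google.generativeai as genai

    genai.configure(api_key=os.environ["GOOGLE_API_KEY"])
    with open(pdf_path, "rb") as f:
        pdf_bytes = f.read()
    model = genai.GenerativeModel(model_name)
    response = model.generate_content(build_pdf_parts(pdf_bytes, prompt))
    return response.text
```

Usage, with `report.pdf` as a placeholder path: `print(ask_about_pdf("report.pdf", "Summarize the charts in this document."))`.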

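The complete code for the Streamlit app I wrote to call the Gemini API is at the end of this article. It supports conversations about video, audio, images, PDFs, and plain text.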
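The app passes the browser-reported type (`uploaded_file.type`) straight to `genai.upload_file`. If a browser reports an empty or generic type for one of these formats, an extension-based fallback can fill the gap. Here is a minimal sketch using Python's standard `mimetypes` module; `resolve_mime_type` is a hypothetical helper, not part of the original code.

```python
import mimetypes
from typing import Optional


def resolve_mime_type(filename: str, reported_type: Optional[str] = None) -> str:
    """Prefer the browser-reported MIME type; otherwise guess it from the file extension."""
    # Treat an empty value or the generic binary type as "unknown".
    if reported_type and reported_type != "application/octet-stream":
        return reported_type
    guessed, _encoding = mimetypes.guess_type(filename)
    # Fall back to the generic binary type when the extension is unrecognized.
    return guessed or "application/octet-stream"
```

In the app, this would replace `mime_type = uploaded_file.type` with `mime_type = resolve_mime_type(uploaded_file.name, uploaded_file.type)`.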

Complete Code

```python
import os
import tempfile
import time

import google.generativeai as genai
import streamlit as st
from dotenv import load_dotenv

# Load environment variables from the .env file
load_dotenv()

# Configure the Gemini API with the key from the environment
genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))


def upload_to_gemini(file, mime_type=None):
    """Uploads the given Streamlit UploadedFile to Gemini via the File API."""
    # genai.upload_file needs a path on disk, so write the upload to a temp file first
    suffix = f".{file.name.split('.')[-1]}"
    with tempfile.NamedTemporaryFile(delete=False, suffix=suffix) as tmp_file:
        tmp_file.write(file.getvalue())
        tmp_file_path = tmp_file.name
    try:
        gemini_file = genai.upload_file(tmp_file_path, mime_type=mime_type, display_name=file.name)
        st.success(f"Uploaded file '{gemini_file.display_name}' as: {gemini_file.uri}")
        return gemini_file
    finally:
        # Remove the temp file whether or not the upload succeeded
        os.unlink(tmp_file_path)


def wait_for_file_processing(file):
    """Wait for the file to be processed by Gemini (30 polls, 2 seconds apart)."""
    max_attempts = 30
    for _attempt in range(max_attempts):
        file_info = genai.get_file(file.name)
        if file_info.state.name == "ACTIVE":
            return True
        elif file_info.state.name == "FAILED":
            st.error(f"File processing failed: {file.name}")
            return False
        time.sleep(2)
    st.error(f"File processing timed out: {file.name}")
    return False


def get_gemini_model(model_name):
    """Creates and returns the Gemini model."""
    generation_config = {
        "temperature": 0.7,
        "top_p": 1,
        "top_k": 32,
        "max_output_tokens": 8192,
    }
    # BLOCK_NONE turns off blocking for these four harm categories
    safety_settings = [
        {"category": "HARM_CATEGORY_HARASSMENT", "threshold": "BLOCK_NONE"},
        {"category": "HARM_CATEGORY_HATE_SPEECH", "threshold": "BLOCK_NONE"},
        {"category": "HARM_CATEGORY_SEXUALLY_EXPLICIT", "threshold": "BLOCK_NONE"},
        {"category": "HARM_CATEGORY_DANGEROUS_CONTENT", "threshold": "BLOCK_NONE"},
    ]
    return genai.GenerativeModel(
        model_name=model_name,
        generation_config=generation_config,
        safety_settings=safety_settings,
    )


def process_gemini_response(response):
    """Process a non-streaming Gemini response and handle potential errors."""
    if not response.candidates:
        for rating in response.prompt_feedback.safety_ratings:
            # probability is an enum, so compare its name rather than the enum itself
            if rating.probability.name != "NEGLIGIBLE":
                return f"Response blocked due to {rating.category.name} with probability {rating.probability.name}"
        return "No response generated. Please try a different prompt."
    if response.candidates[0].finish_reason.name == "SAFETY":
        return "Response was blocked due to safety concerns. Please try a different prompt."
    return response.text


def main():
    st.title("Gemini AI Chat with Optional File Upload")

    # Initialize session state
    if "messages" not in st.session_state:
        st.session_state.messages = []
    if "uploaded_file" not in st.session_state:
        st.session_state.uploaded_file = None
    if "uploaded_file_name" not in st.session_state:
        # Local filename of the upload; the Gemini File object's .name is "files/...", not this
        st.session_state.uploaded_file_name = None
    if "file_uploader_key" not in st.session_state:
        st.session_state.file_uploader_key = 0
    if "file_processed" not in st.session_state:
        st.session_state.file_processed = False

    # Sidebar
    st.sidebar.title("Conversation Controls")

    # New conversation: clear history and reset the uploader by changing its key
    if st.sidebar.button("Start New Conversation"):
        st.session_state.messages = []
        st.session_state.uploaded_file = None
        st.session_state.uploaded_file_name = None
        st.session_state.file_uploader_key += 1
        st.session_state.file_processed = False
        st.rerun()

    # Model selection
    model_name = st.selectbox("Choose a Gemini model", ["gemini-1.5-flash", "gemini-1.5-pro-exp-0801"])

    # File upload
    uploaded_file = st.file_uploader(
        "Choose a file, image, video, or audio to upload (optional)",
        type=["pdf", "txt", "png", "jpg", "jpeg", "mp4", "mp3", "wav"],
        key=f"file_uploader_{st.session_state.file_uploader_key}",
    )

    # Upload only when a new file is selected, not again on every rerun
    if uploaded_file and uploaded_file.name != st.session_state.uploaded_file_name:
        with st.spinner("Uploading and processing file..."):
            mime_type = uploaded_file.type
            if mime_type.startswith("image"):
                st.image(uploaded_file, caption="Uploaded image", use_column_width=True)
            elif mime_type.startswith("audio"):
                st.audio(uploaded_file, format=mime_type)
            file = upload_to_gemini(uploaded_file, mime_type=mime_type)
            if wait_for_file_processing(file):
                st.session_state.uploaded_file = file
                st.session_state.uploaded_file_name = uploaded_file.name
                st.session_state.file_processed = True
                st.success("File is ready. You can start asking about it.")
            else:
                st.error("File processing failed. Please try again.")
                st.session_state.uploaded_file = None
                st.session_state.uploaded_file_name = None
                st.session_state.file_processed = False

    # Display chat history
    for message in st.session_state.messages:
        with st.chat_message(message["role"]):
            st.markdown(message["content"])

    # Chat input
    if prompt := st.chat_input("Enter your question or prompt"):
        st.session_state.messages.append({"role": "user", "content": prompt})
        with st.chat_message("user"):
            st.markdown(prompt)

        model = get_gemini_model(model_name)
        with st.chat_message("assistant"):
            message_placeholder = st.empty()
            full_response = ""
            try:
                # Each prompt is sent on its own (no chat history), plus the file if one is ready
                if st.session_state.uploaded_file and st.session_state.file_processed:
                    response = model.generate_content([st.session_state.uploaded_file, prompt], stream=True)
                else:
                    response = model.generate_content(prompt, stream=True)
                # Render the streamed chunks as they arrive
                for chunk in response:
                    full_response += chunk.text
                    message_placeholder.markdown(full_response)
            except Exception as e:
                st.error(f"An error occurred: {str(e)}")
                full_response = "Sorry, an error occurred while processing your request. Please try again or start a new conversation."
                message_placeholder.markdown(full_response)
        st.session_state.messages.append({"role": "assistant", "content": full_response})


if __name__ == "__main__":
    main()
```
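`wait_for_file_processing` polls `genai.get_file` until the file's state becomes `ACTIVE` or `FAILED`, giving up after 30 attempts spaced 2 seconds apart (roughly a minute). The same loop can be written as a generic helper that takes the state-fetching function as a parameter, which makes the timeout logic easy to check without calling the API. `poll_until_done` and its parameters are illustrative and not part of the SDK.

```python
import time
from typing import Callable


def poll_until_done(
    get_state: Callable[[], str],
    max_attempts: int = 30,
    interval_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_state() until it returns ACTIVE or FAILED, and return that state.

    Returns "TIMEOUT" if neither terminal state appears within max_attempts polls.
    """
    for attempt in range(max_attempts):
        state = get_state()
        if state in ("ACTIVE", "FAILED"):
            return state
        # Wait only between polls, not after the final one.
        if attempt < max_attempts - 1:
            sleep(interval_s)
    return "TIMEOUT"
```

Inside the app it could be called as `poll_until_done(lambda: genai.get_file(file.name).state.name)`. Unlike the original loop, it skips the sleep after the last attempt, and injecting `sleep` lets the timeout path run instantly.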

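How to Run

To run this program, follow these steps:

1. Prepare the environment: make sure Python is installed (3.9 or later recommended). Using a virtual environment to manage dependencies is advisable.
2. Install dependencies: run the following command to install the required libraries: `pip install streamlit google-generativeai python-dotenv Pillow`
3. Set the API key: create a `.env` file in the project root and add your Google API key to it: `GOOGLE_API_KEY=your_api_key_here`
4. Save the code: save the code above as a Python file, for example `app.py`.
5. Run the app: in a terminal, navigate to the directory containing `app.py` and run `streamlit run app.py`.
6. Open the app: Streamlit prints a local URL in the terminal (usually `http://localhost:8501`). Open this URL in your browser to use the app.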
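`genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))` passes `None` when the `.env` file is missing or the variable is misspelled, and the problem may only show up later as a failed request. A small startup guard makes it obvious; `require_api_key` is a hypothetical helper that uses only the standard library.

```python
import os


def require_api_key(var_name: str = "GOOGLE_API_KEY") -> str:
    """Return the API key from the environment, or fail early with a clear message."""
    key = os.getenv(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Add it to the .env file in the project root, "
            f"e.g. {var_name}=your_api_key_here"
        )
    return key
```

In the app, `genai.configure(api_key=require_api_key())` would replace the original call right after `load_dotenv()`.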

Article link: https://google-gemini.cc/gemini_102.html
